Data collaboration is a key driver of modern organizational success. No aspect of modern life is untouched by the need and importance of data collaboration. It is really expansive, covering how we share and edit documents (whether that be Word, Excel, text or JSON), how we write databases and how we code.

I would like to present a somewhat analytic philosophical take on what data collaboration is and how we should think about it. I believe that this will help us not only understand what we are doing now, but where we need to go in the future to really achieve a more enlightened approach to data collaboration.

We can read this as a query at the world w, which gives us back all substitutions of variables that would make the query true at world w.

Here we have a world in which Jane and Joe are Jim’s parents, and Tim and Elena are Kate’s parents. That’s sensible enough, but we may need to update this world when Jim and Kate have children.

This requires a state transition. We will go from a world

Let’s say σ says that we are going to add a child Sarah of Jim and Kate. We might write this as:

Different worlds have different facts. And we move from one world to the next through an arrow (which we could call an accessibility relation). The arrow is transitive, in the sense that we can follow the arrow through any number of hops.

But these worlds we have pictured above are arranged in a linear fashion. This is how we usually think of our own world. That is, we generally think of there being a single timeline, and everything that happens is shared for all participants. As those with a bit of experience with quantum mechanics may know, this may very well not be true! However, it is mostly true at human scales. And it is certainly convenient to think in this fashion as it is simpler. And simpler is better when it’s still right enough. In the words of Albert Einstein:

This non-linearity leads us to branching worlds. And here is where git gets really interesting. We can reconcile histories by creating new shared understandings through the process of rebases and merges.

Each state transition from one commit to another can be described as some number of text line deletions and some number of line additions. If these regions are non-overlapping we can perform a three-way-merge.

This new commit essentially makes the diagram commute. We can think of the new merge commit as arising from either of the two branches, as a patch, both arriving at precisely the same final state.

This ability to get a shared world from divergent ones is what forms the backbone of git’s success in acting as a collaboration tool. We collaborate on code by finding acceptable shared worlds after having made state transitions whose granularities reflect the cadence of program writing.

There are also very clever ways of relaxing how our worlds come to a shared agreement. Instead of having to reduce immediately to w₂, we can decide that our algorithm only needs to eventually get us there. Intermediate reads, in different localities, will not get the same world!

Sometimes this is good enough, and sometimes good enough is better because it’s faster. If you have a monotonically increasing counter for instance, you don’t care if you add one now, or add one later. The sum at the end will the same. People missing a bunch of up-votes when they check their social media will not cause serious concern. They’ll see them in a few hours and perhaps they will never be the wiser.

Google sends on the updates to the clients allowing client updates to be fixed by transforming them. Hence the name OT: Operational Transformation. We can get a linear world by taking google’s view as canonical, with the order of messages received. But each client can update their view independently after receiving the updates such that they are appropriately transformed.

Again, we are finding a way to agree on our final world state – this time by reordering transaction updates such that we don’t have to agree them all in advance, which would make our application feel very laggy and it would not have the illusion of being a shared resource at all!

Another way to achieve this same effect is with a CRDT. A CRDT1 builds operations that commute in the first place. That is, it doesn’t matter the order of the operations, when they are applied they arrive at the same final state. Of course this commutivity places a lot of restrictions on what types of operations we can do. We certainly can’t have commuting bank transactions where you pay for your latte from your empty bank account and then get paid. But it can do a lot, and if you can make your σ’s commute then life is really great.

The Agreed Universe

Collaboration requires having a shared view of the world. It doesn’t require that we share absolutely everything. Coming to a shared concept of the state of something is what data collaboration is all about. To do this, we need a bit of exploration as to where our assumptions line up with those of others. In order to collaborate we need ways to minimize the stomping-on of toes. Collaboration is therefore, necessarily, a bit of a dance. There are many ways to view this dance, but I think a special mention should go to git. Git really stands out as a tool for data collaboration on one of the most complex types of data we have: Code. And the way we do this collaboration is very different from the way we collaborate with one of the other extremely widespread data collabration tools: the database. Now there are various kinds of databases, various replication technologies, some very sophisticated and various levels of isolation given which change the way we collaborate. In order to think about data and change, we’re going to stray into a universe of multiple worlds which was perhaps best conceptualized by Saul Kripke from whom we will borrow liberally. This philosophical framework is very general, but it can also be very precise. This makes it a useful lens through which to view our activities.Linear Worlds

The very concept of isolation, a core concept in database systems, and the I in ACID, stems from the notion that databases should have one current state of the world. Our transactions exist to construct movement in this one world. Isolation allows us to ignore how others are changing the world and focus on our own problems. Since nobody likes having to deal with others’ problems, this is good news. It is really convenient when transactional commits happen with reads and writes that are scheduled relatively close to each other and where scaling vertically is not an issue. It works so well that databases working on this model are absolutely pervasive in our computer architectures. Each database query tells us something about the state of the current world. For instance, if we have a world w we can ask a question parent(X,Y) where we get all X Y for which parent(X,Y) is true.Diagram:

w ⊢ parent(X,Y) ← {[X = jim, Y = jane ], [X = jim, Y = joe],

[X = kate, Y = elena], [X = kate, Y = tim]}

w to w' via some state transition σ (sigma).

w w'

⋆ → ⋆

σ σ ̣≡ insert:parent(sarah,jim) ∧ insert:parent(sarah,kate).

Now we can get a different answer at w and w’ for a question such as the following:

w ⊢ parent(sarah,Y) ← {}

w' ⊢ parent(sarah,Y) ← {[Y = jim], [Y = kate]}A theory should be as simple as possible, and no simpler.

Locality in the Simulation

When we try to simulate our understanding of the state of the world we inevitably find that we can’t be everywhere at once. Locality is a factor of the real world that is inescapable. At a physical level, this is because the speed of light provides an upper limit to our communication times. The fundamental locality of operations is something which we must constantly contend within software engineering and systems design and architecture. The difficulty of cache coherence is perhaps legendary and reflects this fact. Databases are no strangers to the problem. But it also arises with “real-time” collaboration software like google docs or Google Sheets.Code

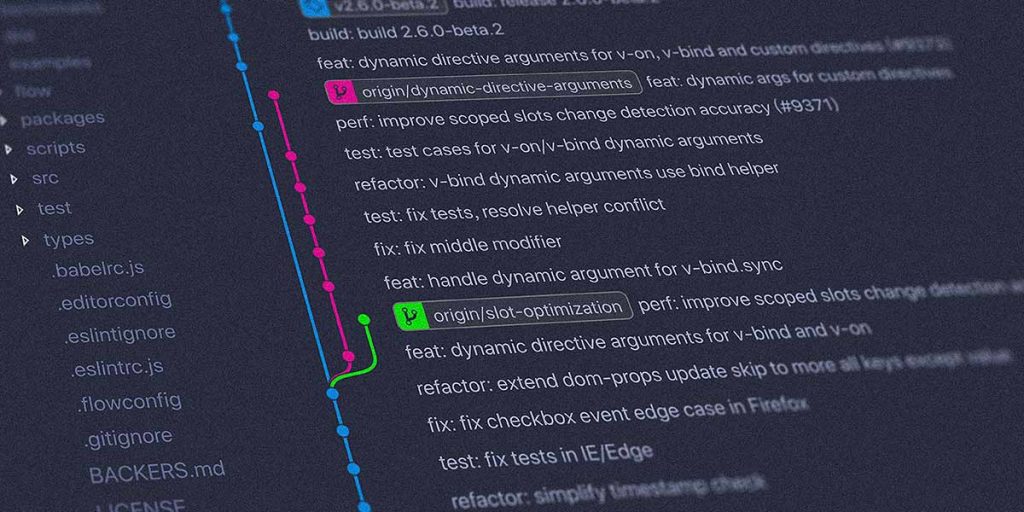

The way that we program with computer code is step orientated. In compiled languages, we have to make a syntactically complete update, a commit as it were, run the compiler and get an output. In dynamic languages like JavaScript and Python, we generally update the state of the program code and re-run the code after a similarly syntactically complete change. Even in the now relatively rare image-based programming models which were used in Lisp and SmallTalk for instance, updates would happen to a chunk simultaneously – perhaps a function, class definition, or a procedure. The naturality of this chunk-at-a-time transition is why git’s commits are natural units for revision control. It also means that it is convenient for our changes to be done in a local version which we edit in a single chunk, and only later attempt to reconcile with changes that might be made by others. It is possible to have simultaneous editing of code by multiple participants using other ideas such as CRDTs (Conflict Free Replicated Data Type) or OTs (Operational Transformations) which we will look at in a bit (these also deal with the problems of locality by the way), they simply aren’t that useful since we don’t know when the code is ready to compile because the commit granularity of characters is too fine to make sense. Here it is useful to think of these commits as worlds. What is true at a world is a state of a set of files. What we can query is the lines in these files. We call these worlds things like77a407c or perhaps we give them temporary names like origin/main to refer to the most recent version. They are also distinctly not linear.

main

⋆ → ⋆ → ⋆ → ⋆

↘

⋆ → ⋆

dev main

⋆ → ⋆ → ⋆ → ⋆ → ⋆

↘ ↗ ⇑

⋆ → ⋆ merge commit

devReplication

Replication of databases means that we try to get local copies of a database that are all the same, in some sense, and on some timescale. One simple approach uses a primary for transactions and potentially multiple backups which replicate the latest state of this database (for some notion of latest). The strategy here is to keep the linear timeline (which we saw above) which is to be organized by a single transaction processing server for some transaction domain or shard. This tends to be much easier than having some sort of communication that would resolve issues with transactions. However, more elaborate approaches which involve coming to a consensus also exist. These make the timeline seem linear to the participants. But the secret underlying sauce in these algorithms is that the timeline is not linear: We are actually dealing with multiple worlds. Our task is to make sure that some agent processes can up with a way to arrive at a shared final state which all participants agree with. That is, the same final world state. (w₁ replicated)

w₀ → w₁ → wₐ → w₂

↘ ↗

w₁ → wₑ (w₁ replicated)

w₀ → w₁ → wₐ → w₂ ... wₓ (I eventually got joe's upvote)

↘ ↗

w₁ → wₑ ... wₙCRDT and OT

The illusion of a common resource which is provided by google docs is a fantastic productivity tool. You can co-edit a document in real-time and rarely does one think about where it is. But it is actually somewhere! More correctly, it’s multiple places at the same time, in multiple different worlds with different states. It is not a shared resource at all. Instead what we are doing is creating replicas with a transaction processing system that can re-order transactions. When I edit a document I create a number of edit operations. These edit operations are applied to my local copy of a document. I then send these to google’s servers.me:

w₀ → w₂ → wₙ

σ₀ σ₁'

google:

(joe,σ₁) (me,σ₀)

joe:

w₀ → w₁ → wₙ

σ₁ σ₀'What Pieces are Missing

I hope that seeing things laid out in a general framework that unifies these very disparate ways of collaborating has inspired some new ideas. It certainly has got me thinking about what we don’t have that we probably should have. What pieces are missing from the collaboration puzzle?Structured Patches

The first is the concept of structured patches. This issue is very close to my heart as it is what we are currently working on at TerminusDB, and I’ve written some preliminary thoughts about it in a discussion on patches. “There is nothing new under the sun” applies here. There are several excellent papers on the question of patches for structured data which I have pointed in my blog on syntactic versioning. There are example programs that use these approaches which are open source as well. However, I think it is fair to say that the use of patches on structured data has not hit prime-time. The tools to make use of it are not really there yet. But perhaps more importantly, the scope of its power is not at all appreciated. It is a way to communicate information in a way that can make explicit when things commute. That is, the conflicts which arise during merges in git are caused by non-commutative patches. And this is the same thing as a transaction that does not commute. This fact, now that we know a bit of Kripke semantics, should immediately remind us of the kinds of things we do in other circumstances when things do not commute!Kripke Query

The other glaring hole in our current tech which becomes obvious when we look at the states of the world as kripke structures is the ability to query through worlds. In git we actually have a fair number of tools for this.git log is all about the worlds. Our git commands can world-travel which is actually a super-set of time-travel. We can go to different branches, as well as different views of how the world evolved.

But git is a very limited sort of database. It essentially has chunks of text at worlds, with some associated metadata. With a real database that had the ability to travel through worlds, whole new avenues open up.

One of these is a modal logic query languages. Kripke semantics was originally devised by Saul Kripke to create semantics that could be used for modal logics. And one obvious modal logic which might be useful for databases is temporal logic.

What did we know at state w? This could be very important for auditing. What decisions were made at some point in the past relies on what knowledge they had at that point? If you don’t know what you knew, you can’t evaluate if you did the right thing at that time. Of course, this would seem to be an almost no-brainer for regulatory requirements.

This is potentially really powerful. A database constraint is generally formulated as a proposition that obtains in every world. If we know about our constraints as well as about our state transitions (patches) then we can know more about these.

But we can also potentially make constraints like eventually or other very powerful such statements such as are found in CTL.

Speaking in more practical engineering terms, we might ask for when a particular object was edited last, and by whom or what algorithm. Or when was the last time that a document referred to another document?