Last week we had our Webinar about DataOps. I ask my colleague – Dmytri Kleiner, Director Of Services at TerminusDB some questions about DataOps (mostly questions that myself and many of you have in mind).

You can access all our webinars as podcasts here, including this interview with Dmytri and a previous one with the founders of TerminusDB.

What is Dataops? What’s the difference between Devops and Dataops?

First, we have to know what is Devops. Developers and operations were once totally separate – there was a wall between and this caused difficulties as they couldn’t understand what was there and what the implications. It took too long and too difficult to get into production. Integration and delivery were painful things.

Then there come new inventions, in the developer community Git and GitHub became popular. While in the ops world, cloud computing became prominent. And there is infrastructure as code, so the two become closer and closer together.

So Devops is a change of the philosophy – Rather than having a developer and operations silo – you have within teams ability to combine the two silos. CI/CD is allowed with this pipeline approach. Development to testing to production is enabled and a pipeline is developed that involved various stages and the infrastructure can be also managed similarly.

More than software changes. Data also changes! This single pipeline model only assumes there are changes in source code but does not take into account the data changes.

In a content management system – you have live data which changes. You have workflows. Just like software development, it has stages. This is where DataOps arises. How do you manage the workflow between software and data that is also changing?

What makes Dataops in the spotlight? Why is it important (for businesses)?

Developers have Git and GitHub, and Ops have Jenkins and cloud providers like AWS. We have a lot of exciting tools for software developers and operation management to use.

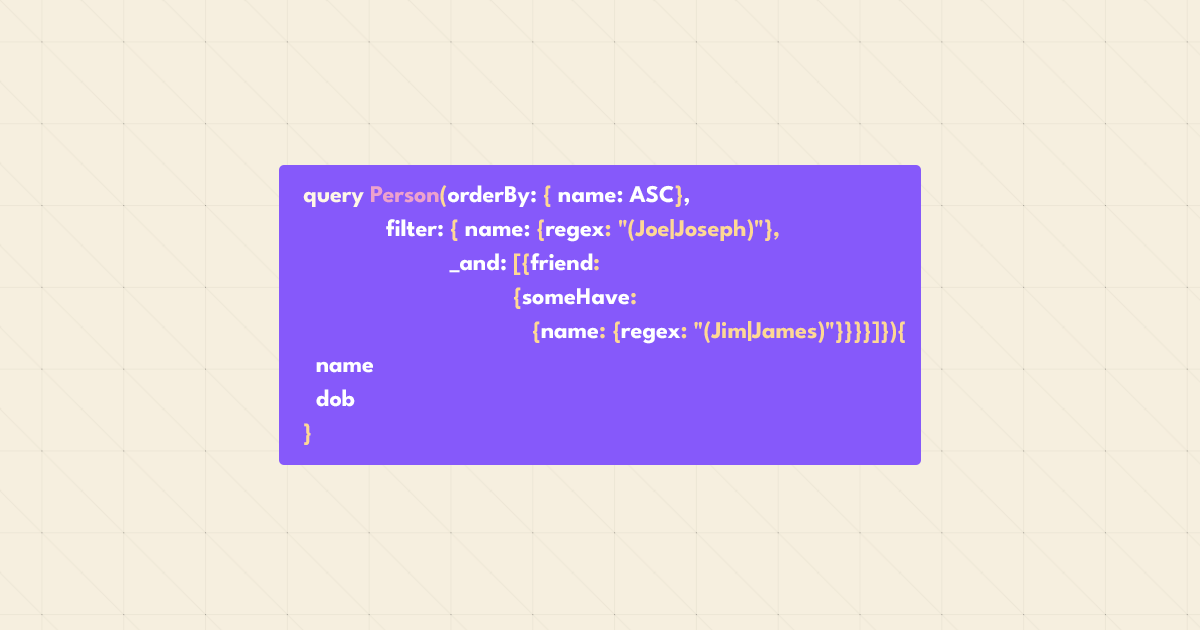

Sadly not so far for data people. Still stuck in SQL that is there forever. No workflows built into the software. Can do merge and testing in the data pipeline. This is basic now in Dev and Ops. But difficult in data. If I want to update a product in e-commerce – how do I do it? Do I do it in a dev environment, test environment, production environment? Need to fit workflow so marketing and others can check and test. Is the content ready to go? Need to model that into data. I can model my workflow with branches and clones. Hard to do the same thing with data. The common workaround is to do it by pushing into the data model itself. It is brittle and hard to change.

And the other problem is. When the software developer developed new features and it requires changes in schema and data structure for this feature to work. If you only have one production database – how do you do it if it is different from the production version of the software? Now they have achieved this by using a series of practices, like migrations, which are scripts that get pushed down the pipeline. Then add or remove versions to match the changed schema. The downside is DB can be offline for a while and it can get messy and fragile. It is a solution, but a bad one! Lack of better tooling. With branch merge and clone, it is all better and easier.

We need a production-like version of my DB for testing. Because we don’t want to test on a production version of DB, we don’t want to break it. Therefore, there’s a need to copy all data to develop the environment, which could be a huge and inefficient task in the data set is large. So far most of the tools that we are using are still behind to meet the requirements of these tasks.

Is Dataops different for a data science project and a software development project?

Data Science project tends to have more complicated queries, often more complicate schemas as well and perhaps lower velocity of data. The main point about it is not whether it is a data science project or an e-commerce project. It is whether this project has a complicated schema and what is the content velocity and how complicated is the workflow.

My observation is, data science project has a lower content velocity but not always, and maybe with a more complicated schema because what they are doing is more scientifically precise. But I think many of the Dataops can be applied to the data science projects as well. And the property in a data Science team there is a bigger problem in the workflow as they are not working with a DevOps environment where they can do some of the practices that I have described. So I can imagine the practices there could be even more ad-hoc.

What makes a successful Dataops?

Just like DevOps, the goals of Dataops is to eliminate silos and to create cross-functional teams and have full ownership of their tasks and all the capabilities of what they need to do. A successful Dataops team is like a successful DevOps team. They are allowed to do what they need to do: have the ability to write software, create infrastructure, as well as to manage data. They got the right mandate and authority to do what they need to do. They are empowered to do what they need to do. And they have all the capacities to do what they need to do.

What roles does Dataops play in remote working?

We are building new tools for remote work, they involved data, as well as software, involving Dataops into Devops, would be a good idea as lots of the software engineering works are involved with data. Data are commonly poorly managed. Data can be treated like software, and doing so will require new tools and lots of new practices.

On the other hand, the data science team needs to learn how to do perform DevOps practices, how to treat data as code, how to treat schema as code such that they can be populated down the pipeline, can be managed and workflow can be managed.

Do we need a specific person in a team to be in-charge of Dataops?

Organizations can have champions specialist in the practice in, someone who is passionate about moving forward. But I believed in cross-functional teams. In the team, the responsibility should be shared. Need a team that works together to learn a range of relevant skills, to help each other out and share knowledge and understanding of practices.

Any insight about the future of DataOps?

There is going to be advanced organizations and others that are slower. The ability to process and collaborate on data is a key competitive advantage. If they don’t get the Dataops and DevOps right, they will face consequences in the market. Because their competitors will outperform them. These companies will be further along forced by the market. Others in academic and technical settings will have a harder time convincing management to invest in developing the skills and toolsets. Because it is not perceived as critical to advantage in these places. But I think it will be short-sighted. Performing better and better will become more and more important as we go forward.

Anything else you would like to add?

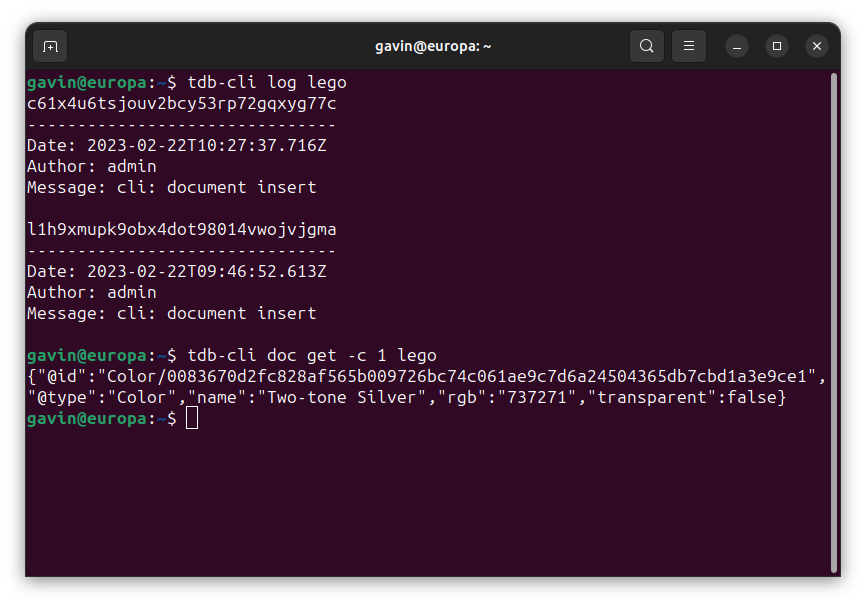

TerminusDB – this is where we think we can make a difference with our software. By providing free and open software that can help with the Dataops team, we provide a platform and services that carry the practices that are already common in software development and operations teams.

We are early in our journey and we want people to get on early. We provide free software and we have a very open community that is happy to help you. Please get on board so we can solve real-life problems with our software.